DQC State of the Art Today: the TLDR Overview

14th of October 2025

In just seven years, distributed quantum computing (DQC) went from theoretical sketches to experimental claims of distribution, though we are still far from practical DQC. This post lists what's missing, what we have, and what you can actually try today.

Xanadu's Aurora, announced about a year ago, was the first claim of a distributed quantum computer. The device itself has not been publicly released, but its photonic architecture is interesting because photons are the flying qubits that naturally carry quantum information between modules. In a sense, photonic quantum computers are distributed by design. You can move atoms or ions between locations by optical transport, but that approach is unlikely to scale over long distances, which is why most visions of quantum networking rely on photons as the carriers of entanglement.

Oxford's recent experiment claiming a distributed algorithm (technically a teleportation between two processors rather than a computation across them) marked another small but meaningful step.

In just seven years, the field has moved from theoretical sketches to physical demonstrations of distribution.

Still, we are missing the shared backbone that would let us test these ideas end-to-end. We have early-stage quantum computers and networks, but they rarely meet within a single experimental setup. A real shame, especially given that many institutions hold both under the same roof.

Because no public distributed testbeds exist, we cannot yet benchmark distributed workloads or reproduce results across groups. It also means that the measurements needed to calibrate distributed quantum simulators do not exist.

On the other side of the spectrum, compiler stacks that treat communication as a first-class resource with explicit costs are few, and even fewer integrate real network models. Error models for quantum channels are scattered and hard to compare across platforms. Orchestration layers that manage heterogeneous devices and track entanglement as a consumable are only starting to appear.

In other words, we are missing the shared infrastructure and public access that would make distributed quantum computing a testable reality. So what are researchers doing in the meantime?

Emulation & Simulation

Emulation refers to running real quantum programs today while mimicking distribution mechanics.

Simulation refers to classically modeling quantum devices and networks.

While we wait for accessible distributed hardware, we can emulate quantum channels through two methods:

- Knitting: Today we run subcircuits separately and stitch them with classical data. This is often known as circuit knitting. Qiskit's cutting addon is perhaps the most popular software package for this. The idea is to run subcircuits sequentially, sample them many times, and feed those measurements as inputs to the next. The method breaks inter-partition entanglement and scales poorly, but for a long time it was the only practical option.

- Ladders: A more recent alternative consists of chaining many CNOT hops between distant regions of a chip, somewhat emulating the role of a noisy quantum link without breaking entanglement.
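To get a feel for why knitting "scales poorly" and why ladders are noisy, here is a back-of-the-envelope sketch. It rests on two assumptions that are not from this post: the commonly cited quasi-probability result that each cut CNOT multiplies the required shots by γ² = 9 (γ = 3), and a crude independent-error model in which each CNOT hop multiplies fidelity by a fixed factor. The function names and the 0.99 default are hypothetical, chosen only for illustration.

```python
def knitting_sampling_overhead(num_cut_cnots, gamma=3.0):
    """Shot multiplier for circuit knitting.

    Assumption: every cut CNOT contributes a factor gamma**2 (gamma = 3),
    so k cuts cost gamma**(2k) times more samples.
    """
    return gamma ** (2 * num_cut_cnots)


def ladder_fidelity(num_hops, cnot_fidelity=0.99):
    """Rough fidelity of a CNOT ladder.

    Assumption: errors are independent, so per-hop fidelities multiply.
    The default 0.99 is a placeholder, not a measured value.
    """
    return cnot_fidelity ** num_hops


if __name__ == "__main__":
    for cuts in (1, 5, 10):
        print(f"{cuts} cut CNOTs -> x{knitting_sampling_overhead(cuts):.0f} shots")
    for hops in (10, 50, 100):
        print(f"{hops} hops -> fidelity ~ {ladder_fidelity(hops):.3f}")
```

Under these assumptions, ten cut CNOTs already imply about 3.5 billion times more shots, while a ladder degrades smoothly with the number of hops. Neither number is a substitute for a calibrated model of a real link.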

If you want to explore DQC in simulation, these are common choices:

- NetSquid (TU Delft): considered state of the art for quantum network simulation. Closed source, so internals are not directly inspectable or modifiable unless you collaborate.

- SeQUeNCe (Argonne National Laboratory): an open-source counterpart. It models realistic network layers and lets you build distributed protocols from components.

- Qiskit Addon: supports classical emulation of circuit cutting inside Qiskit. Fast to try. Not a physical network simulator.

The Noisy Reality

Noise is the main reason simulators and emulators fall short. It dominates fidelity and defines whether any distributed computation survives execution. Early studies already show that network channels can inject up to an order of magnitude more loss than local gates and measurements [Campbell et al. 2022], when modeled with the same internal noise levels. The difference comes from how network noise behaves: it depends on distance, link quality, timing, and how qubits are moved or entangled across space. That spatial dependence cannot be emulated within a single chip, which makes calibration against real hardware impossible for now.

A second challenge is heterogeneity. Distributed systems will likely link devices built on entirely different technologies, each with its own strengths and weaknesses. One node might operate with trapped ions and include native error correction; another could use superconducting qubits with faster but noisier gates. Synchronizing these devices means managing multiple noise profiles and aligning control layers that were never designed to talk to each other. We can barely achieve that level of coordination even within monolithic setups.
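To put a rough number on the distance dependence of network noise, here is a minimal sketch of photon loss in optical fiber. It covers loss only, not the full noise analysis of [Campbell et al. 2022]. The 0.2 dB/km attenuation is an assumed typical value for telecom fiber at 1550 nm, and the function names are hypothetical.

```python
def fiber_transmission(length_km, attenuation_db_per_km=0.2):
    """Probability that a photon survives a fiber of the given length.

    Assumption: pure exponential loss with a fixed attenuation in dB/km.
    """
    return 10 ** (-attenuation_db_per_km * length_km / 10)


def expected_attempts(length_km, attenuation_db_per_km=0.2):
    """Mean number of tries until one photon arrives (geometric distribution).

    Ignores detector efficiency, coupling losses, and memory decoherence.
    """
    return 1 / fiber_transmission(length_km, attenuation_db_per_km)


if __name__ == "__main__":
    for km in (1, 10, 50, 100):
        print(f"{km:>4} km: transmission {fiber_transmission(km):.3f}, "
              f"~{expected_attempts(km):.1f} attempts per delivered photon")
```

Even in this idealized picture the cost grows exponentially with distance, and nothing inside a single chip behaves that way.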

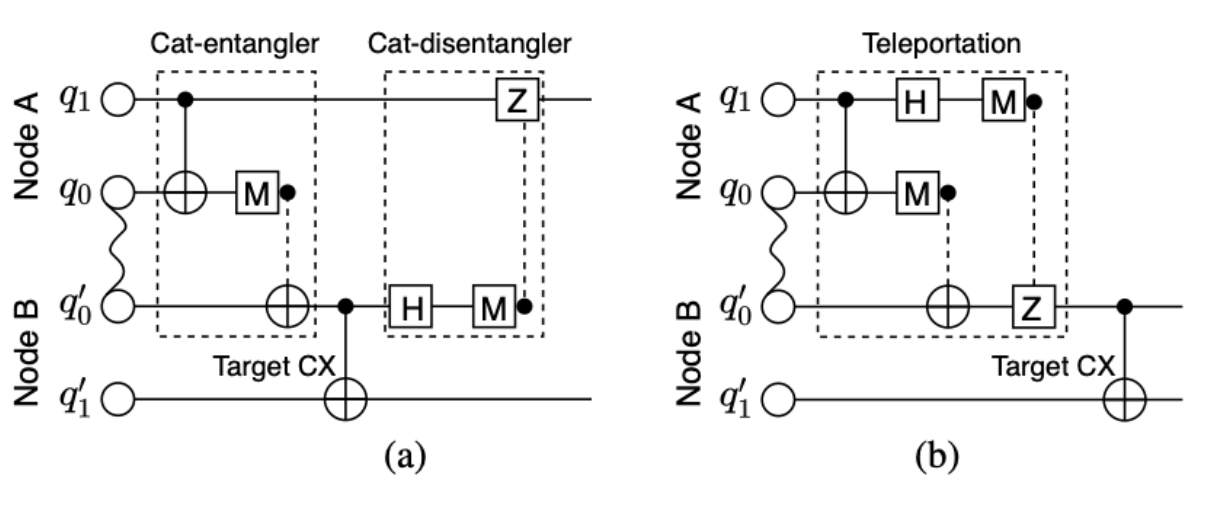

Finally, all distribution costs reduce to communication. Moving quantum information between modules requires well-defined primitives and clear models of how they affect fidelity and runtime. Two remain central today:

- TP (teleportation): transfers quantum states using shared entanglement and classical communication. Under limited capacity, it often behaves like a non-local swap to enable local operations.

- CAT (gate teleportation): transfers the *action* of a gate using an entangled resource, often a GHZ or cat state.
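Both primitives fit in a few lines of noiseless simulation. The sketch below is a toy statevector model in pure Python, not the implementation used by any of the tools mentioned here. The qubit layout, function names, and the choice to consume one Bell pair per call are assumptions made for illustration.

```python
import math
import random

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]
X = [[0, 1], [1, 0]]
Z = [[1, 0], [0, -1]]

def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q (qubit q is bit q of the basis index)."""
    new = list(state)
    for i in range(len(state)):
        if not (i >> q) & 1:
            j = i | (1 << q)
            new[i] = gate[0][0] * state[i] + gate[0][1] * state[j]
            new[j] = gate[1][0] * state[i] + gate[1][1] * state[j]
    return new

def apply_cnot(state, control, target):
    """Flip the target bit on every basis state whose control bit is 1."""
    return [state[i ^ (1 << target)] if (i >> control) & 1 else state[i]
            for i in range(len(state))]

def measure(state, q, rng):
    """Projectively measure qubit q; return (outcome, collapsed state)."""
    p1 = sum(abs(a) ** 2 for i, a in enumerate(state) if (i >> q) & 1)
    outcome = 1 if rng.random() < p1 else 0
    norm = math.sqrt(p1 if outcome else 1 - p1)
    return outcome, [a / norm if ((i >> q) & 1) == outcome else 0j
                     for i, a in enumerate(state)]

def teleport(alpha, beta, rng):
    """TP: move alpha|0> + beta|1> from qubit 0 to qubit 2 via a Bell pair on 1, 2."""
    state = [0j] * 8
    state[0], state[1] = alpha, beta                 # qubit 0 holds the message
    state = apply_cnot(apply_1q(state, H, 1), 1, 2)  # shared entanglement
    state = apply_1q(apply_cnot(state, 0, 1), H, 0)  # rotate into the Bell basis
    m0, state = measure(state, 0, rng)
    m1, state = measure(state, 1, rng)               # two classical bits are sent
    if m1:
        state = apply_1q(state, X, 2)
    if m0:
        state = apply_1q(state, Z, 2)
    base = m0 | (m1 << 1)
    return state[base], state[base | 4]              # amplitudes of qubit 2

def remote_cnot(psi, rng):
    """CAT: CNOT from qubit 0 (node A) onto qubit 3 (node B) via a Bell pair on 1, 2.

    psi holds 4 amplitudes indexed with bit 0 = control, bit 1 = target.
    """
    state = [0j] * 16
    for k, amp in enumerate(psi):
        state[(k & 1) | ((k >> 1) << 3)] = amp
    state = apply_cnot(apply_1q(state, H, 1), 1, 2)  # shared entanglement
    state = apply_cnot(state, 0, 1)                  # entangle control with the pair
    m1, state = measure(state, 1, rng)               # one classical bit A -> B
    if m1:
        state = apply_1q(state, X, 2)
    state = apply_cnot(state, 2, 3)                  # the gate itself, local to B
    state = apply_1q(state, H, 2)
    m2, state = measure(state, 2, rng)               # one classical bit B -> A
    if m2:
        state = apply_1q(state, Z, 0)
    base = (m1 << 1) | (m2 << 2)
    return [state[base | (k & 1) | ((k >> 1) << 3)] for k in range(4)]

if __name__ == "__main__":
    rng = random.Random(0)
    print(teleport(0.6, 0.8j, rng))
    print(remote_cnot([0.5, 0.5j, -0.5, 0.5], rng))
```

In this idealized setting each call uses one entangled pair and two classical bits. The difference is what moves: TP relocates the state itself, while CAT leaves both data qubits in place and only applies the gate across the link.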

An open letter to anyone who will read: make a testbed

Almost every quantum hardware company out there talks about scalability, often directly pointing to DQC and quantum networking. Today we have theory, prototypes, simulators that claim to capture the future, and more importantly, an eager and growing interest from the research community. What we lack is a place where they can meet, a baseline to test and compare. We need a true distributed quantum testbed. One that would let us connect real processors (computationally relevant nodes of 50+ qubits), quantify network noise in real distributed computation settings, and check whether our emulations reflect physical reality. One that could turn distributed quantum computing from a concept into a science.

-> If you are a lab with both a quantum computer and a quantum network, connect them. You'll be one of the first to do so.

-> If you are a company connecting multiple devices, open a fraction of that infrastructure for research access. This is, in my personal view, how IBM got the monopoly it currently holds over quantum cloud access. It was the first company that opened the door in an accessible manner. The same moment is coming for DQC: claim that ground.

-> If you are funding national or academic programs, build and make node pairs public. Shared hardware is how we build shared truth.

Until we can execute protocols on connected machines, DQC will remain a theoretical promise. The missing link between vision and validation is a public testbed. Build it (and let me know how it goes)!

References

- [Wu et al. 2022] AutoComm: A Framework for Enabling Efficient Communication in Distributed Quantum Programs

- [Campbell et al. 2022] Quantum data centres: a simulation-based comparative noise analysis